You need certainty when a controller meets a grid model, a motor drive, or a complex protection scheme. The choice between hardware‑in‑the‑loop and real‑time simulation shapes that certainty more than any single tooling decision. Teams seeking tighter timing margins, better safety, and faster iteration benefit from clear guidance on what to use, and when to use it. The good news is that both approaches fit neatly into modern test workflows once you understand their purpose, limits, and strengths.

Projects rarely fail on physics alone; they slip because timing, interfaces, or assumptions were never tested under stress. Hardware‑in‑the‑loop and real‑time simulation systems give you a safe way to stress the right things before field trials. You can exercise controllers, power converters, and protection logic against models that run at electrical time scales. You can also reproduce edge cases, measure timing precisely, and repeat tests without risking equipment.

What engineers need to know about hardware in the loop vs real time simulation

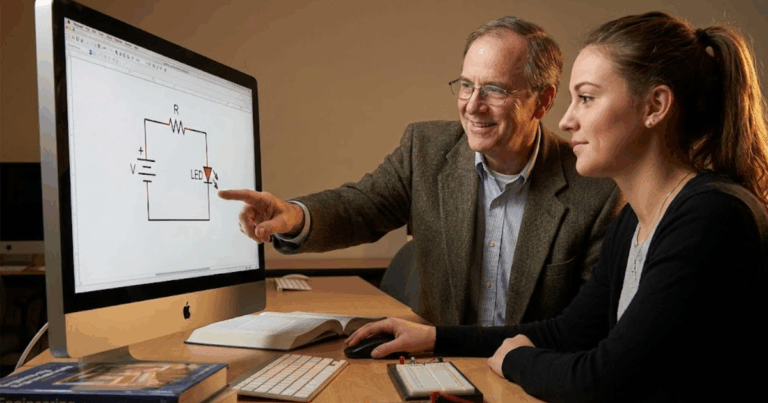

Hardware‑in‑the‑loop (HIL) connects a physical controller or device under test to a digital simulator that runs an executable plant model in real time. The simulator computes voltages, currents, and states in fixed time steps, then exchanges signals with the hardware through analogue and digital I/O, communication buses, or power interfaces. This setup exposes firmware to realistic dynamics, delays, noise, and faults, which reveals issues that desktop modelling rarely shows. You keep risk low while learning how the controller behaves under tight timing and adverse conditions.

Real‑time simulation, without physical hardware present, focuses on running detailed power system models at wall‑clock speed for study, training, or software‑only testing. Engineers use it to validate protection logic, explore operating limits, and rehearse procedures on a high‑fidelity digital twin. Nothing prevents you from later attaching hardware, yet the pure simulation phase stands on its own for system analysis and team learning. The two approaches complement each other, and both reduce time to safe on‑site commissioning.

How hardware-in-the-loop simulation works for power system validation

Engineers evaluating HIL often ask how the loop closes, what time steps are required, and how safety is maintained. Hardware‑in‑the‑loop simulation relies on accurate models, deterministic execution, and properly conditioned signals. A digital simulator executes the plant model, while I/O hardware exchanges values with a controller at fixed rates. The outcome depends on numerics, interfacing quality, and thorough test design.

Closed‑loop connection between controller and simulated grid

HIL closes the loop by feeding simulated measurements to a controller, then applying the controller’s outputs back to the model inside the same cycle. The controller reads analogue values like phase currents, DC link voltage, or frequency, then drives pulse‑width modulation, contactors, or setpoints. The simulator computes the next plant state from these commands, using a fixed step no longer than the controller’s sample period so the plant updates at least as often as the controller does. This cycle exposes the controller to realistic dynamics while preserving lab safety.
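The measure, compute, apply cycle can be sketched in software. This is only an illustration: the RL load, the PI gains, and the function name `run_closed_loop` are assumptions, and in a real rig the controller would be physical hardware reached through I/O rather than a few lines inside the loop.

```python
def run_closed_loop(steps=2000, dt=50e-6):
    """Fixed-step loop: plant -> measurement -> controller -> plant.

    All parameter values are assumed for illustration only.
    """
    r_ohm, l_h = 1.0, 10e-3    # assumed series RL load
    kp, ki = 5.0, 2000.0       # assumed PI current-controller gains
    i_ref = 10.0               # current setpoint in amperes
    v_max = 100.0              # actuator voltage limit
    i = 0.0                    # plant state: load current
    integ = 0.0                # controller state: integral term

    for _ in range(steps):
        # 1. The simulator presents a measurement to the controller.
        meas = i
        # 2. The controller computes its command from that measurement.
        err = i_ref - meas
        integ += ki * err * dt
        v_cmd = max(-v_max, min(v_max, kp * err + integ))
        # 3. The plant advances one fixed step (forward Euler):
        #    L di/dt = v - R i
        i += dt * (v_cmd - r_ohm * i) / l_h
    return i
```

With these assumed values the loop settles at the 10 A setpoint well within the 100 ms simulated. Replacing step 2 with real I/O reads and writes is what turns the sketch into the hardware loop described above.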

A key design step is aligning sampling rates so aliasing and jitter do not corrupt control decisions. You choose a time step small enough to capture fast electrical transients, yet large enough to run on available compute. Timing determinism matters more than raw speed, since missed deadlines distort feedback. Reliable clocks, buffered I/O, and timestamped signals reduce uncertainty and keep the loop faithful.
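A simple way to reason about determinism is to compare measured per-step execution times against the step budget. The sketch below assumes the execution times have already been logged in microseconds; the function name and report fields are hypothetical, not part of any simulator's API.

```python
def timing_report(exec_times_us, step_us):
    """Summarise per-step execution times against a fixed step budget."""
    worst = max(exec_times_us)
    best = min(exec_times_us)
    return {
        # Steps that took longer than the budget are missed deadlines.
        "overruns": sum(1 for t in exec_times_us if t > step_us),
        "worst_case_us": worst,
        # Negative margin means the worst step exceeded the budget.
        "margin_us": step_us - worst,
        # Spread between fastest and slowest step.
        "jitter_us": worst - best,
    }
```

For example, `timing_report([40, 42, 55, 41], 50)` reports one overrun and a margin of -5 µs, which suggests either a longer step or a lighter model.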

Signal interfacing, I/O conditioning, and electrical isolation

Controllers expect specific signal levels, impedances, and noise profiles, so the I/O stage must match those expectations. Analogue outputs from the simulator should mimic sensor scaling, filtering, and offset behaviour that the controller firmware expects to see. Digital lines must respect voltage levels and timing windows for interrupts, fault inputs, or synchronisation pulses. Proper isolation protects both the simulator and the device under test during fault emulation.
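As an illustration of sensor scaling, the following sketch maps a simulated physical quantity to the voltage an analogue output channel would drive. The gain, offset, and voltage range are assumed values for a hypothetical current sensor and should come from the real sensor's datasheet.

```python
def to_analog_output(value, gain_v_per_unit, offset_v=0.0,
                     v_min=-10.0, v_max=10.0):
    """Scale a simulated quantity to an output voltage and clip to range."""
    volts = value * gain_v_per_unit + offset_v
    # Clipping mimics the limited swing of a real sensor or DAC channel.
    return max(v_min, min(v_max, volts))
```

With an assumed 0.05 V/A gain, a 2.5 V offset, and a 0 to 5 V range, a simulated 20 A maps to 3.5 V, while 100 A clips at 5 V, just as a saturated sensor would.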

Communication buses such as CAN, Ethernet‑based protocols, or serial links often carry configuration, status, and diagnostics. HIL setups map these messages to the plant model so that commands change simulated states, not just variables on a screen. Time‑synchronised buses help correlate controller actions with plant responses down to microseconds. Good message handling exposes firmware logic issues that only appear under load and noise.
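To make the idea of mapping messages to the plant model concrete, here is a minimal sketch that decodes a setpoint frame and writes it into a plant state dictionary. The 4-byte payload layout (little-endian signed current in 0.1 A units, a mode byte, and a rolling counter) is invented for illustration and does not correspond to any specific protocol or product.

```python
import struct


def apply_setpoint_frame(payload: bytes, plant_state: dict) -> dict:
    """Decode a hypothetical 4-byte setpoint frame into plant state."""
    # "<hBB": little-endian int16, then two unsigned bytes.
    raw_current, mode, counter = struct.unpack("<hBB", payload)
    plant_state["i_ref_a"] = raw_current * 0.1   # assumed 0.1 A per count
    plant_state["mode"] = mode
    plant_state["last_counter"] = counter        # lets tests detect dropped frames
    return plant_state
```

Keeping the rolling counter in the state is one way to let a test script notice dropped or repeated frames under load.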

Model execution, time steps, and numerical stability

Power electronics, protection, and grid dynamics push time steps into the microsecond to sub‑millisecond range. Fixed‑step solvers keep execution predictable, and specialised solvers handle stiff systems found in converter switching and protection relays. Model partitioning across CPU and FPGA resources balances throughput with responsiveness. When the model meets its timing budget, the controller experiences physics with the fidelity needed for validation.

Numerical stability supports believable results during long runs, fault sequences, and parameter sweeps. Saturation limits and sensor noise affect how firmware reacts, including how its anti‑windup logic engages, so you include them in the plant model. Engineers routinely validate the model against off‑line references before loading it into the HIL target. Confidence grows when the HIL response tracks measured data, known operating points, and design‑stage simulations.
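The link between step size and stability can be seen on a single decaying state, dx/dt = -x/τ. The sketch below compares explicit (forward) and implicit (backward) Euler updates; it is a textbook illustration with assumed values, not a description of any particular simulator's solver.

```python
def euler_decay(tau, dt, steps, implicit=False):
    """Integrate dx/dt = -x/tau from x = 1 with a fixed step."""
    x = 1.0
    for _ in range(steps):
        if implicit:
            # Backward Euler: stable for any positive dt.
            x = x / (1.0 + dt / tau)
        else:
            # Forward Euler: stable only while dt < 2 * tau.
            x = x * (1.0 - dt / tau)
    return x
```

With an assumed τ of 1 µs and a 5 µs step, the explicit update grows without bound while the implicit one decays as the physics demands. This is one reason stiff converter models call for specialised solvers or smaller steps.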

Fault injection, edge cases, and automated test orchestration

HIL earns its keep when you inject faults that would be unsafe or expensive on a physical setup. Line‑to‑line faults, islanding events, breaker mis‑operations, and sensor failures are simple to define in the simulator. You can chain scenarios, record responses, and compare runs with programmatic criteria for pass or fail. Repeatability improves, and regression testing becomes straightforward.

Automation frameworks schedule tests, sweep parameters, and collect time‑aligned signals across controllers, simulators, and meters. Engineers build a library of scenarios tied to requirements and standards, then rerun them after firmware changes. Hardware‑in‑the‑loop simulation makes failure modes visible sooner, which shortens the path to robust protection and control. Teams gain evidence that stands up to internal reviews and certification steps.
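A scenario library with programmatic pass or fail criteria might look like the sketch below. The `Scenario` fields and the `evaluate` function are hypothetical names chosen for illustration; a real framework would also carry requirement identifiers and logged signals.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Scenario:
    name: str
    fault_time_s: float       # when the simulator injects the fault
    max_trip_delay_s: float   # requirement: trip within this delay


def evaluate(scenario: Scenario, trip_time_s: Optional[float]) -> bool:
    """Pass only if the device tripped after the fault and within the limit."""
    if trip_time_s is None:
        return False          # no trip recorded counts as a failure
    delay = trip_time_s - scenario.fault_time_s
    return 0.0 <= delay <= scenario.max_trip_delay_s
```

Because each verdict depends only on the scenario definition and the recorded trip time, the same check can be rerun unchanged after every firmware build.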

HIL fits naturally within model‑based design, control prototyping, and safety‑critical development. The approach safeguards equipment while exposing firmware to the physics that matter. You learn how algorithms behave when confronted with delays, saturation, and noise that desk models rarely capture. Confidence rises when validation covers both nominal operation and carefully staged faults.

Key differences between hardware-in-the-loop and real time simulation technologies

The main difference between hardware‑in‑the‑loop and real‑time simulation is the presence of a physical device under test inside a closed feedback loop. HIL couples a controller or power device to a running plant model, while pure real‑time simulation keeps everything in the digital domain. HIL focuses on firmware timing, I/O handling, and safety under stress, whereas real‑time simulation emphasises system studies, procedures, and large‑scale scenarios. Both rely on deterministic execution and accurate models, yet they answer different engineering questions.

Real‑time simulation shines when you need scale, scenario variety, and operator training. HIL shines when you must see how an actual controller responds to the same scenarios, noise, and latencies. Teams often start with real‑time studies, then progress to HIL before field trials for end‑to‑end confidence. Using both improves coverage, reduces risk, and tightens schedule predictability.

| Aspect | Hardware‑in‑the‑loop (HIL) | Real‑time simulation |
| --- | --- | --- |
| Primary purpose | Validate physical controller or device behaviour under realistic plant dynamics | Study system behaviour, validate logic, and rehearse procedures without hardware |
| Hardware present | Yes, device under test is connected | No physical device, digital twin only |
| Signal exchange | Analogue, digital, and communication buses with isolation and conditioning | Internal model signals, supervisory I/O optional |
| Typical time step | Microseconds to sub‑millisecond to match controller cycles | Milliseconds to sub‑millisecond based on study needs |
| Risk profile | Low risk compared to on‑site tests, yet real hardware is involved | Lowest risk, nothing physical at stake |
| Test scope | Controller timing, I/O handling, protection actions | System stability, power quality, operator procedures |
| Scalability | Limited by I/O channels and hardware capacity | Broad scalability across feeders, converters, and geographical scope |
| Cost focus | I/O hardware, isolation, device management | Compute capacity, model scope, visualization |
| When to use | Firmware validation before bench energization and site work | Planning studies, training, and software‑only validation |

How real time simulation systems support power system testing and analysis

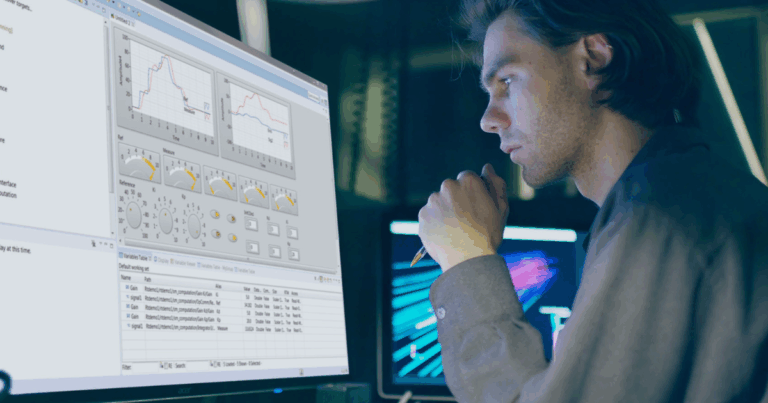

Teams handling protection, converter control, and microgrids rely on deterministic simulations that keep pace with wall‑clock time. Real‑time simulation systems remove guesswork around timing, help you repeat scenarios, and allow stakeholders to see outcomes clearly. You can exercise models with scripted events, live operator actions, or data feeds from supervisory systems. The result is better decisions about settings, ratings, and safe operating limits.

Protection scheme validation and timing analysis

Protection studies benefit from repeatable fault scenarios that reveal timing margins and coordination issues. Engineers simulate faults, breaker trips, and reclosing sequences to tune settings and confirm selectivity. Arc, ground, and phase faults can be staged at different locations to observe reach, speed, and reliability without risking equipment. These studies surface edge cases early and reduce surprises during commissioning.

Time alignment across relays, breakers, and communication channels is easier when the simulator provides a single time base. You can log sampled values, comparator outputs, and trip commands with microsecond resolution. Result data flows into standard analysis tools to compare settings, examine mis‑operations, and document compliance. Real time simulation of power system behaviour turns protection work into a repeatable, data‑rich process.
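With a single time base, measuring trip delay from logs reduces to finding two timestamps. The sketch below assumes each log is a list of `(timestamp_us, value)` pairs already recorded against the simulator clock. The function names and the pickup-threshold approach are illustrative assumptions.

```python
def first_crossing(samples, threshold):
    """Return the timestamp of the first sample at or above threshold."""
    for timestamp, value in samples:
        if value >= threshold:
            return timestamp
    return None


def trip_delay_us(current_log, trip_log, pickup_a):
    """Delay between current exceeding pickup and the trip command."""
    t_fault = first_crossing([(t, abs(i)) for t, i in current_log], pickup_a)
    t_trip = first_crossing(trip_log, 1)
    if t_fault is None or t_trip is None:
        return None           # no fault seen, or the relay never tripped
    return t_trip - t_fault
```

Integer microsecond timestamps keep the subtraction exact, which helps when comparing delays across many runs.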

Converter and microgrid control development

Converter, inverter, and microgrid studies require electrical detail and controller‑friendly signals. Real‑time engines let you study switching effects, filter design, and DC link dynamics while maintaining deterministic steps. Engineers explore start‑up sequences, ride‑through performance, and current limits under faults and voltage dips. Findings inform controller parameterisation and hardware selection.

Microgrid studies also benefit from model libraries covering sources, loads, and protection elements. You can examine transitions between grid‑connected and islanded modes, verify droop settings, and test black‑start strategies. Scenarios include renewable intermittency, energy storage response, and load steps that happen without warning. Teams move faster when the simulator provides quick iteration on control ideas and operating procedures.
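Droop settings can be sanity-checked with a few lines before running a full study. The sketch below assumes a linear frequency droop expressed per unit of each source's rating and equal droop on every unit; the function names and the 5 % default are illustrative, and sign conventions vary between tools.

```python
def droop_frequency(p_w, p_rated_w, f_no_load_hz=50.0, droop=0.05):
    """Steady-state frequency of a unit delivering p_w under linear droop."""
    return f_no_load_hz * (1.0 - droop * p_w / p_rated_w)


def share_load(total_w, ratings_w):
    """With equal per-unit droop, units share load in proportion to rating."""
    total_rating = sum(ratings_w)
    return [total_w * rating / total_rating for rating in ratings_w]
```

For two assumed units rated 100 kW and 200 kW carrying 150 kW in total, the shares are 50 kW and 100 kW, and both units settle at the same frequency, which is the property a droop study sets out to confirm.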

Power quality, harmonics, and stability studies

Power quality metrics such as total harmonic distortion, flicker, and voltage unbalance require precise sampling and repeatability. Real‑time simulation systems support phasor and time‑domain views so engineers quantify limits and mitigation options. Filters, control loops, and switching patterns can be compared side by side with identical excitation. This reduces guesswork around tuning and hardware selection.
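Total harmonic distortion can be computed directly from one period of sampled waveform. The sketch below uses a plain discrete Fourier sum from the standard library and assumes the samples span exactly one fundamental period with more than twice as many points as the highest harmonic. The function names and the cut-off at the 40th harmonic are illustrative choices.

```python
import cmath
import math


def harmonic_magnitude(samples, k):
    """Peak amplitude of the k-th harmonic over one fundamental period."""
    n = len(samples)
    acc = sum(x * cmath.exp(-2j * math.pi * k * idx / n)
              for idx, x in enumerate(samples))
    return 2.0 * abs(acc) / n


def thd(samples, max_harmonic=40):
    """Ratio of RSS harmonic content (2..max_harmonic) to the fundamental."""
    fundamental = harmonic_magnitude(samples, 1)
    harmonics = math.sqrt(sum(harmonic_magnitude(samples, k) ** 2
                              for k in range(2, max_harmonic + 1)))
    return harmonics / fundamental
```

A unit sine with a 10 % fifth harmonic yields a THD of 0.1, which makes a convenient self-check before applying the function to simulator logs.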

Stability analysis across frequency ranges calls for models that mix fast converter dynamics with slower grid modes. Modal analysis, root‑locus exploration, and small‑signal studies inform controller gains that balance speed and robustness. The same platform then validates large‑signal behaviour such as faults, islanding events, and reconfiguration. Consistent timing and logging make findings credible across stakeholders.

Model‑based design, software workflows, and team productivity

Model‑based design workflows use a single source of truth that runs off‑line for design and on a target for real‑time tests. Engineers generate code, configure experiments, and archive datasets so results remain traceable. Automation hooks let you run nightly suites, track regressions, and compare datasets after firmware changes. Teams save time when test orchestration is scriptable and models are reusable.
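Comparing datasets after a firmware change can start with something as small as the sketch below, which checks a new trace against a stored reference. It assumes both traces are non-empty and were sampled at the same instants; the function names and the single absolute tolerance are illustrative simplifications.

```python
def max_deviation(reference, candidate):
    """Largest absolute sample-by-sample difference between two traces."""
    if len(reference) != len(candidate):
        raise ValueError("traces must have the same number of samples")
    return max(abs(r - c) for r, c in zip(reference, candidate))


def regression_ok(reference, candidate, tolerance):
    """True when the candidate stays within tolerance of the reference."""
    return max_deviation(reference, candidate) <= tolerance
```

A nightly suite could call `regression_ok` per signal and flag any trace that drifts beyond its tolerance.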

Collaboration improves when electrical, controls, and protection specialists work from the same real‑time platform. Shared models reduce rework, and shared datasets make reviews efficient. Clear procedures around versioning, seeding, and logging build confidence across labs and project partners. The outcome is faster progress with fewer errors reaching field trials.

Real‑time simulation targets system‑level questions, settings, and operating practices. Teams learn faster because complex scenarios are safe to repeat, pause, and adjust. The approach helps you separate control issues from plant limits before hardware is on the bench. Better preparation reduces on‑site surprises and shortens the path to safe energization.

Benefits of using real time simulation of power system design and testing

Engineers care about outcomes, not theatre. Real‑time simulation of power systems improves timing fidelity, keeps tests repeatable, and supports automation. You can measure cause and effect with confidence because the same timelines apply across devices and models. Stakeholders gain shared context that improves decisions about risk and cost.

- Reduced risk before field work: Testing on a simulator avoids unsafe energization, while still exposing control and protection logic to realistic scenarios. You learn from faults and edge cases without putting equipment or schedules at risk.

- Faster iteration on controls and settings: Deterministic steps and scripted events shorten the cycle from idea to evidence. Teams compare configurations quickly, which accelerates tuning and design sign‑off.

- Better visibility into timing and delays: Real‑time engines reveal jitter, latencies, and sampling issues that desk models hide. You identify bottlenecks early, then adjust implementation or hardware.

- Higher test coverage with automation: Repeatable scenarios make it easy to sweep parameters, inject faults, and compare outcomes. Regression suites protect against unintended changes and help maintain quality.

- Scalable studies across feeders and assets: Real‑time simulation systems scale from component‑level checks to feeder‑level or multi‑station studies. The same toolkit supports targeted tests and broad planning exercises.

- Stronger collaboration across teams: Shared models, common data formats, and coordinated procedures align electrical, controls, and operations specialists. Clear evidence builds trust, and reviews become more productive.

- Easier path to HIL and commissioning: Work done in pure simulation transfers to hardware‑in‑the‑loop simulation with minimal rework. Consistency across stages shortens learning curves and keeps momentum.

Clear benefits show up as fewer defects, safer tests, and smoother handoffs. Real‑time platforms reduce uncertainty across teams, vendors, and sites. The payoff increases when you integrate automation, model reuse, and disciplined data management. Confidence rises because results are repeatable, transparent, and tied to requirements.

Practical use cases of hardware in the loop and real time simulation in energy and transportation

Projects across energy and transportation depend on accurate timing and safe validation. Hardware‑in‑the‑loop and real‑time simulation cover complementary parts of that need. You start with system studies, then move to controller‑focused HIL before on‑site work. Evidence builds, risks drop, and teams align on acceptance criteria.

Grid‑connected inverter compliance and anti‑islanding testing

Inverter‑based resources must meet interconnection and ride‑through requirements with predictable behaviour. Real‑time platforms let you stage voltage dips, frequency excursions, and flicker to verify controls and filters. Engineers examine current limits, PLL stability, and protection settings under stressed conditions. Findings guide firmware updates and de‑risk certification steps.

HIL then exposes the actual controller to anti‑islanding logic, fault handling, and recovery sequences. Injected faults, distorted waveforms, and noisy measurements reveal weakness in timing or filtering. You can sweep operating points, adjust parameters, and confirm reliable trip thresholds. The approach shortens the path from firmware change to a passing test report.

Energy storage management and battery protection

Energy storage systems hinge on safe charge control, thermal management, and accurate state estimation. Real‑time simulation creates repeatable duty cycles, calendar ageing profiles, and temperature ranges. Engineers explore limits for power ramping, voltage windows, and balancing strategies without stressing assets. This improves sizing decisions, control tuning, and safety margins.
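A repeatable duty cycle needs a battery model that tracks state of charge. The sketch below uses simple coulomb counting and is an assumption made for illustration: the function name is hypothetical, positive current means discharge, and real models would also account for efficiency, temperature, and ageing.

```python
def update_soc(soc, current_a, dt_s, capacity_ah):
    """Advance state of charge (0..1) by one step of coulomb counting."""
    # Ampere-seconds drawn this step, relative to total capacity.
    soc -= current_a * dt_s / (capacity_ah * 3600.0)
    # Clamp so the model never reports an impossible state.
    return max(0.0, min(1.0, soc))
```

For an assumed 100 Ah pack discharged at 50 A for one hour, the state of charge falls from 1.0 to 0.5, which gives a quick check that step size and units are consistent.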

HIL connects the storage controller to simulated cells, converters, and grid conditions through conditioned I/O. Sensor faults, communication dropouts, and sudden load steps verify protective actions and fail‑safe modes. Automated sequences demonstrate that limits hold under abuse cases while avoiding damage. Teams enter commissioning with data that supports both performance and safety.

Traction inverter and on‑board charger validation for electric vehicles

Motor control and charging firmware must perform across wide operating ranges and supply conditions. Real‑time models cover transient torque steps, regenerative braking, and supply disturbances. Data traces tie torque ripple, current distortion, and thermal effects to control parameters. Engineers move faster when every change can be tested under identical conditions.

HIL places the actual controller in the loop with high‑bandwidth I/O that resembles sensors and actuators. You can test start‑up, shutdown, and fault handling without touching a vehicle. Automated tests cover watchdogs, limp modes, and energy‑limited scenarios that matter on the road. The process turns firmware risk into a manageable, instrumented exercise.

Microgrid and protection coordination for industrial sites

Microgrids mix sources, loads, and protection elements that must cooperate under varying conditions. Real‑time studies explore transitions to islanded operation, black‑start strategies, and load shedding thresholds. Engineers inspect selectivity among breakers, relay coordination, and converter ride‑through. These insights reduce downtime risk and improve operator readiness.

HIL verifies that controller logic responds correctly when measurements drift, communication slows, or faults stack up. The simulator delivers states that are hard to reproduce on‑site while keeping hardware safe. Teams refine logic, update setpoints, and confirm that alarms, trips, and reclose actions are secure. The same scripts become acceptance tests before energization.

Use cases show why both approaches belong in a mature validation plan. Real‑time studies reveal system behaviour and safe operating limits. HIL then proves that physical controllers act correctly under the same conditions, delays, and noise. Using both creates a clear, auditable trail from model to on‑site confidence.

Common challenges engineers face when adopting hardware in the loop and real time simulation

Adoption hurdles often have little to do with physics and a lot to do with process. Teams need models, I/O, and automation that match their goals. The learning curve shortens when you choose platforms that speak your toolchain and measurements. Budget, lab space, and safety planning also play a part.

- Model fidelity gaps: Plant models that ignore non‑ideal effects can hide timing or stability issues. Address this by validating models against measurements and off‑line references before running them on the target.

- Time‑step selection and overruns: Choosing steps that are too small causes missed deadlines, while steps that are too large hide dynamics. Measure execution margins, trim model scope, and use FPGA acceleration where it helps most.

- I/O integration and signal conditioning: Mismatched voltage levels, impedances, or timing windows lead to misleading results. Specify isolation, scaling, and filters that mimic sensors, then verify with bench measurements.

- Data management and traceability: Results lose value when you cannot tie them to model versions, settings, and firmware builds. Adopt consistent naming, metadata, and automated logging so findings remain auditable.

- Test orchestration and coverage: Manual tests are hard to repeat, and cases are easy to overlook. Script scenarios, sweep parameters, and record pass or fail criteria so regressions become routine.

- Team skills and training: Controls, electrical, and software specialists may use different terms and tools. Create shared procedures, short playbooks, and simple starter models so everyone gains momentum quickly.
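The traceability point in the list above can be sketched as a small run record that ties results to the model version, firmware build, and exact parameter set. The field names and the choice of a SHA-256 digest over canonical JSON are assumptions for illustration, not a prescribed format.

```python
import hashlib
import json


def run_record(model_version, firmware_build, params):
    """Build metadata that identifies exactly what a test run used."""
    # Sorted keys give the same digest regardless of dictionary order.
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return {
        "model_version": model_version,
        "firmware_build": firmware_build,
        "params": params,
        "params_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }
```

Storing the digest alongside each dataset makes it easy to confirm later that two runs really used identical settings.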

Clear plans and the right platform remove most obstacles. Teams benefit from templates, example projects, and reference I/O configurations. Good habits around timing budgets, metadata, and safety reviews pay back quickly. Confidence grows as tests become repeatable, fast, and easy to extend.

How OPAL‑RT supports engineers with power system real time simulators

OPAL‑RT helps engineers close the loop between theory and field performance using a power system real time simulator that runs detailed grid and converter models at deterministic steps. You can connect controllers through high‑quality I/O, communication buses, and isolation that match on‑bench conditions. Toolchain compatibility streamlines work that starts in MATLAB or Python, then moves to RT execution without rewrites. Teams gain practical automation hooks for test suites, data capture, and pass or fail reporting that tie directly to requirements.

OPAL‑RT platforms scale from component checks to feeder‑level studies, so you keep one workflow while projects grow. Model libraries and solver options support protection, converter dynamics, and microgrids without sacrificing timing margins. Engineers benefit from reference projects that shorten setup time, plus responsive support that helps tune time steps, I/O paths, and safety interlocks. This combination provides clear, defensible evidence that guides settings, firmware fixes, and safe commissioning.

FAQ

HIL connects your physical controller to a simulated plant at fixed time steps, so firmware timing, I/O, and safety logic are exercised under realistic conditions. Real time simulation keeps everything digital and runs detailed models at wall-clock speed for studies, procedures, and software-only validation. Use HIL to validate device behaviour; use real time simulation systems to analyse settings, sequences, and large scenarios. OPAL-RT supports both paths so you can progress from studies to controller testing with consistent tooling, data, and outcomes.

Pick the smallest step that captures the fastest dynamics you care about, then confirm you can meet deadlines with margin. Converter switching, protection comparators, and sampled values often call for microsecond to sub-millisecond steps, while broader stability studies can run slower. Start with fixed-step targets, profile overruns, and trim model scope or move tight loops to FPGA acceleration if needed. OPAL-RT helps you balance fidelity and execution so your real time simulation of power system behaviour remains deterministic and trustworthy.

Map your controller’s analogue ranges, digital thresholds, timing windows, and communication buses, then select I/O with proper isolation and conditioning. Confirm sensor scaling, filtering, and sync pulses match what the firmware expects to see, and budget for fault-injection channels. Plan for safe trips, interlocks, and logging so you can reproduce tests and compare builds. OPAL-RT provides power system real time simulator platforms with the I/O depth, sync, and automation you need to get meaningful HIL results fast.

Real time simulation can scale from component-level models to feeder-level studies, provided your solver choices, partitioning, and I/O bandwidth align with the study scope. Start with component-level models, validate against references, and then expand to multi-device or feeder studies with consistent sampling and events. Use scripted scenarios and parameter sets so comparisons stay fair as scope grows. OPAL-RT supports stepwise scaling with open workflows, so you keep one toolchain from design studies to grid-level analysis.

Repeatable, time-aligned scenarios expose timing margins, protection selectivity issues, and controller edge cases long before energization. You can inject faults, stage transients, and measure response precisely without touching equipment. Findings translate into clear settings, firmware fixes, and procedures that are easy to review and re-run. OPAL-RT helps you turn these practices into a reliable pipeline, so decisions rest on evidence, not guesswork.